Running large language models locally has never been easier. Docker Model Runner brings the simplicity of container workflows to AI models: pull, run, and interact with LLMs using familiar Docker commands. This guide walks you through everything you need to get started.

Why Run LLMs Locally?

Before diving in, here's why local LLMs matter:

- Privacy: Your data never leaves your machine
- No API costs: No usage fees or rate limits
- Offline access: Works without an internet connection once models are downloaded
- Low latency: No network round-trips

Prerequisites

You'll need:

- Docker Desktop 4.40+ (with Model Runner support)
- 8GB RAM minimum (32GB recommended for larger models)
- 20GB+ free disk space for model storage
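If you want to check the disk and RAM minimums above from a script, here is a minimal sketch using only Python's standard library. `check_prerequisites` and `total_ram_gb` are hypothetical helpers, not part of Docker. RAM detection relies on `os.sysconf`, which is unavailable on Windows, so the RAM fields come back as `None` there. Point `path` at the drive that holds your Docker data.

```python
import os
import shutil


def total_ram_gb():
    """Return total physical RAM in GB, or None if it can't be determined.

    os.sysconf exists on Linux and macOS but not on Windows.
    """
    try:
        page_size = os.sysconf("SC_PAGE_SIZE")
        phys_pages = os.sysconf("SC_PHYS_PAGES")
    except (AttributeError, ValueError, OSError):
        return None
    if page_size <= 0 or phys_pages <= 0:
        return None  # sysconf can report -1 when the value is indeterminate
    return page_size * phys_pages / 1e9


def check_prerequisites(path=".", min_disk_gb=20, min_ram_gb=8):
    """Compare free disk space at `path` and total RAM with the minimums above."""
    free_gb = shutil.disk_usage(path).free / 1e9
    ram_gb = total_ram_gb()
    return {
        "free_disk_gb": round(free_gb, 1),
        "disk_ok": free_gb >= min_disk_gb,
        "ram_gb": None if ram_gb is None else round(ram_gb, 1),
        # None means "could not detect", not "too little"
        "ram_ok": None if ram_gb is None else ram_gb >= min_ram_gb,
    }
```

Calling `check_prerequisites()` with the defaults checks against the 20GB disk and 8GB RAM minimums listed above.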

Verify your Docker installation:

```bash
docker --version
```

Enabling Docker Model Runner

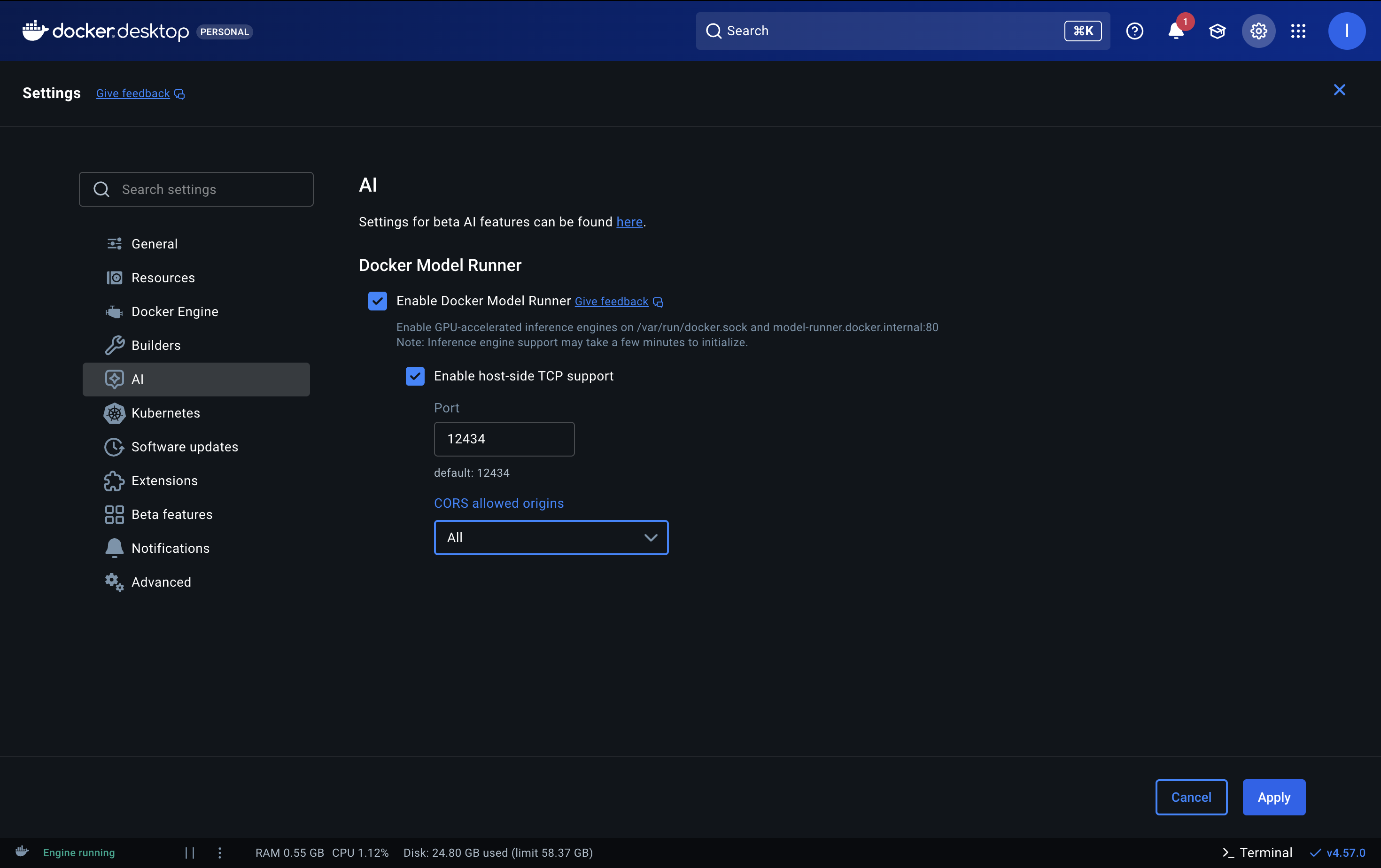

Open Docker Desktop and navigate to Settings > AI. Enable Docker Model Runner, then enable host-side TCP support so you can reach the API from your host machine. The default port is 12434, which you can change if needed. For CORS allowed origins, choose All to accept requests from any origin (convenient for local development, but it lets any web page open in your browser call the API), or enter a custom value to restrict access to specific domains.

Click Apply and wait for Docker to restart. The inference engine may take a few minutes to initialize.
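Because initialization can take a few minutes, a script that depends on the API may want to wait until it answers. A minimal sketch, assuming host-side TCP support is enabled on the default port 12434 and that the OpenAI-compatible `GET /engines/v1/models` endpoint responds once the runner is ready. `wait_for_model_runner` is a hypothetical helper name.

```python
import time
import urllib.request


def wait_for_model_runner(base_url="http://localhost:12434/engines/v1",
                          timeout_s=180.0, interval_s=2.0):
    """Poll GET {base_url}/models until it returns HTTP 200 or timeout_s elapses.

    Returns True as soon as the API answers, False if it never does in time.
    """
    # Ignore any HTTP(S)_PROXY settings: the API is on the local machine.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    deadline = time.monotonic() + timeout_s
    while True:
        try:
            with opener.open(f"{base_url}/models", timeout=interval_s) as resp:
                if resp.status == 200:
                    return True
        except OSError:
            # URLError, HTTPError, and socket timeouts are all OSError
            # subclasses; treat them as "not ready yet".
            pass
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval_s)
```

Polling with a short interval and an overall deadline keeps the script from hanging forever if the runner never comes up.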

You can verify it's working by running:

```bash
docker model list

# If the command isn't recognized (macOS), you may need to link the CLI plugin:
mkdir -p ~/.docker/cli-plugins
ln -s /Applications/Docker.app/Contents/Resources/cli-plugins/docker-model ~/.docker/cli-plugins/docker-model
```

Understanding Inference Engines

Docker Model Runner supports two inference engines:

llama.cpp is the default and runs on every supported platform: macOS (Apple Silicon), Windows, and Linux. It's optimized for running quantized models efficiently on consumer hardware.

vLLM is designed for production workloads with high throughput requirements. It requires NVIDIA GPUs on Linux x86_64 or Windows WSL2.

For most local development, llama.cpp is the right choice.

Model Selection Guide

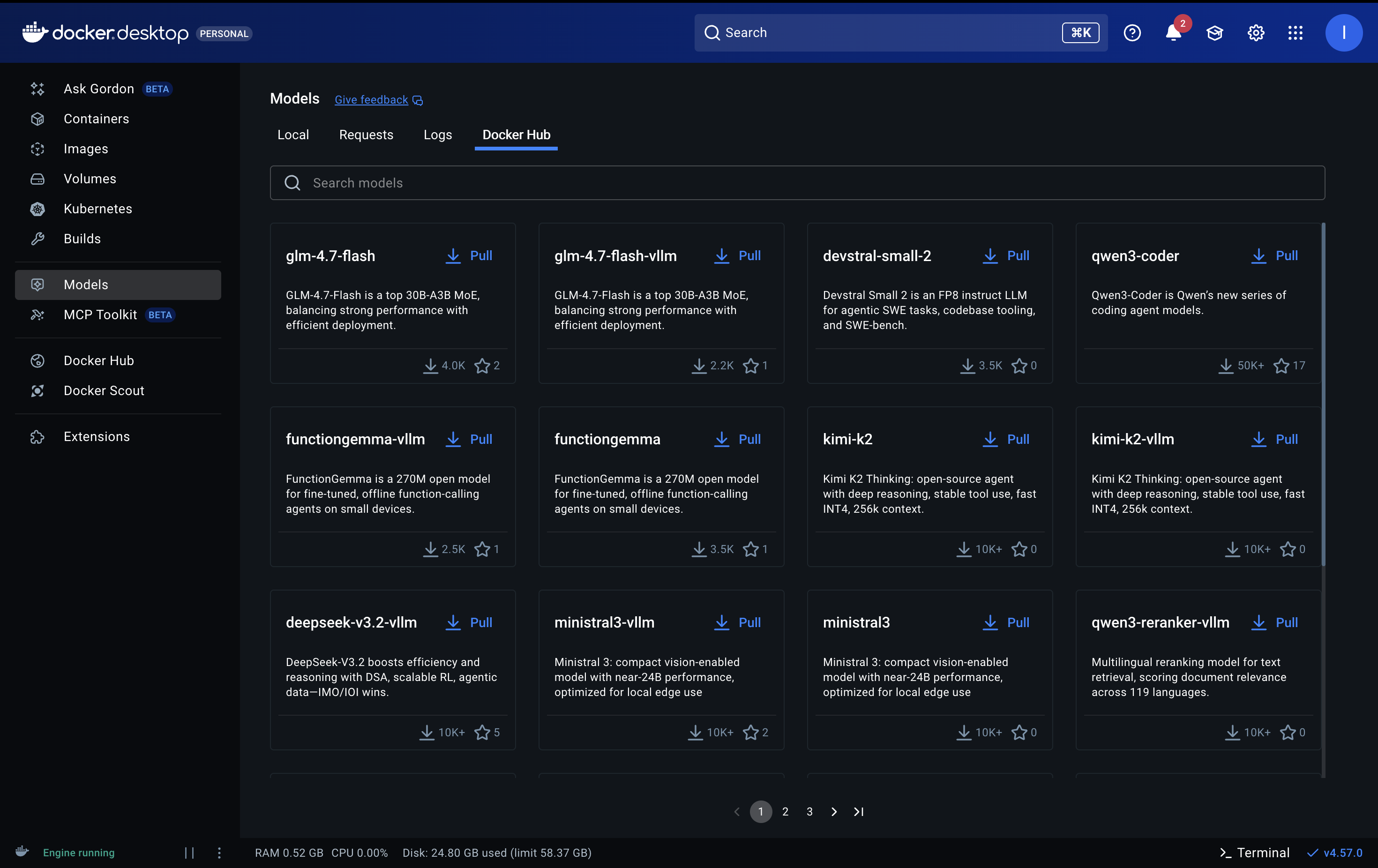

Before pulling models, it helps to understand what's available. You can browse models directly in Docker Desktop by navigating to Models > Docker Hub. This gives you a visual catalog with descriptions, download counts, and one-click pulling.

Choosing the right model depends on your hardware and use case:

Lightweight Models (Under 3B parameters)

Fast responses, minimal resource usage. Ideal for development, testing, and edge devices.

| Model | Parameters | Download size | Best for |
|---|---|---|---|
| ai/smollm2 | 360M | ~256MB | Quick prototyping, constrained devices |
| ai/llama3.2:1B-Q8_0 | 1B | ~1.3GB | On-device apps, summarization |
| ai/llama3.2:3B-Q4_K_M | 3B | ~2GB | Instruction following, tool calling |

```bash
# SmolLM2: ultra-lightweight, great for testing
docker model pull ai/smollm2

# Llama 3.2 1B at 8-bit (Q8_0): strong quality for its size
docker model pull ai/llama3.2:1B-Q8_0

# Llama 3.2 3B at 4-bit (Q4_K_M): better instruction following and tool calling
docker model pull ai/llama3.2:3B-Q4_K_M
```

Mid-Range Models (4B-14B parameters)

Better quality, but requires more resources. Good for most practical applications.

| Model | Parameters | Download size | Best for |
|---|---|---|---|
| ai/mistral | 7B | ~4.4GB | General purpose, enterprise use |
| ai/gemma3 | 4B | ~2.5GB | Research, reasoning tasks |
| ai/phi4 | 14B | ~9GB | Complex reasoning |
| ai/qwen2.5 | 7B | ~4.4GB | Multilingual, coding |

```bash
# Mistral 7B: a solid all-around model
docker model pull ai/mistral

# Qwen 2.5 7B: well suited to multilingual tasks and code generation
docker model pull ai/qwen2.5
```

Large Models (70B+ parameters)

Highest quality output, but demanding on hardware: a 70B model at 4-bit quantization is roughly a 42GB download, so plan for well over 32GB of RAM (or GPU/unified memory).

| Model | Parameters | Download size | Best for |
|---|---|---|---|
| ai/llama3.3 | 70B | ~42GB | Complex tasks, near-frontier quality |
| ai/deepseek-r1-distill-llama (70B variant) | 70B | ~42GB | Advanced reasoning |

```bash
# Llama 3.3 70B: the largest model in this guide (~42GB download)
docker model pull ai/llama3.3
```

Understanding Quantization

Model tags often include a quantization suffix (like Q4_K_M or Q8_0) that indicates how aggressively the weights are compressed:

- Q8_0: 8-bit; highest quality of the three, largest file size
- Q4_K_M: 4-bit with mixed precision; a good balance of quality and efficiency (recommended for most users)
- Q4_0: 4-bit; smallest file size, slightly lower quality

For most use cases, Q4_K_M offers the best trade-off between quality and performance.
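To see why the suffix matters, you can estimate a model's file size as parameters × bits per weight ÷ 8. The sketch below is an approximation: the Q4_K_M figure is an assumed average, metadata is ignored, and `estimate_size_gb`, `fits_in_memory`, and the `headroom_gb` margin are hypothetical, not part of Docker Model Runner.

```python
# Approximate storage per weight for common llama.cpp (GGUF) quantization types.
# Q8_0 and Q4_0 store blocks of 32 weights plus one 16-bit scale, which works
# out to exactly 8.5 and 4.5 bits per weight. Q4_K_M mixes quantization types
# across tensors, so 4.85 is an assumed typical average, not a fixed value.
BITS_PER_WEIGHT = {"Q8_0": 8.5, "Q4_K_M": 4.85, "Q4_0": 4.5}


def estimate_size_gb(num_params, quant):
    """Rough model file size in GB (1 GB = 1e9 bytes), ignoring metadata."""
    return num_params * BITS_PER_WEIGHT[quant] / 8 / 1e9


def fits_in_memory(num_params, quant, available_gb, headroom_gb=4.0):
    """True if the weights plus a hypothetical safety margin fit in available_gb.

    headroom_gb is an assumed allowance for the OS, other apps, and the
    context (KV) cache; tune it for your machine.
    """
    return estimate_size_gb(num_params, quant) + headroom_gb <= available_gb


if __name__ == "__main__":
    for quant in BITS_PER_WEIGHT:
        print(f"7B model at {quant}: ~{estimate_size_gb(7e9, quant):.1f}GB")
```

With these figures, a model with about 1.2B parameters comes to roughly 1.3GB at Q8_0 and a 70B model to roughly 42GB at Q4_K_M, in line with the tables above.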

Pulling Your First Model

Models are pulled from Docker Hub, just like container images:

```bash
# Pull a lightweight model for testing
docker model pull ai/smollm2

# Or pull Llama 3.2 1B for better quality
docker model pull ai/llama3.2:1B-Q8_0

# List your downloaded models
docker model list
```

Models are cached locally after the first download, so later runs skip the download step (loading the model into memory can still take a moment).
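With host-side TCP support enabled, you can also check which models are available from code. A minimal sketch, assuming the OpenAI-compatible `GET /engines/v1/models` endpoint returns an OpenAI-style list of `{"id": ...}` objects. The exact ID strings (for example, whether a tag is included) may differ from `docker model list`, so compare against your own output. `parse_model_ids` and `list_local_models` are hypothetical helper names.

```python
import json
import urllib.request


def parse_model_ids(payload):
    """Pull model IDs out of an OpenAI-style {"object": "list", "data": [...]} body."""
    return [entry["id"] for entry in payload.get("data", []) if "id" in entry]


def list_local_models(base_url="http://localhost:12434/engines/v1"):
    """Return the model IDs reported by GET {base_url}/models."""
    with urllib.request.urlopen(f"{base_url}/models", timeout=10) as resp:
        return parse_model_ids(json.load(resp))
```

Usage: `print(list_local_models())` while Docker Model Runner is running. Keeping the parsing in a separate function makes it easy to test without a live server.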

Running a Model

Start a model with a simple command:

```bash
docker model run ai/llama3.2:1B-Q8_0
```

This starts an interactive chat session in your terminal. Type your prompts and get responses directly.
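`docker model run` also accepts a prompt after the model name, in which case it prints a single response and exits instead of opening a chat. That makes it easy to call from scripts. The sketch below wraps it with Python's `subprocess` module; `build_run_command` and `ask_once` are hypothetical helper names.

```python
import subprocess


def build_run_command(model, prompt=None):
    """Build argv for `docker model run`; with a prompt, the CLI answers once and exits."""
    cmd = ["docker", "model", "run", model]
    if prompt is not None:
        cmd.append(prompt)
    return cmd


def ask_once(model, prompt):
    """Run a single prompt through the CLI and return its stdout as text.

    check=True raises subprocess.CalledProcessError if the command fails.
    """
    result = subprocess.run(build_run_command(model, prompt),
                            capture_output=True, text=True, check=True)
    return result.stdout.strip()
```

Usage: `print(ask_once("ai/smollm2", "Give me one fun fact about containers."))`. Passing arguments as a list rather than a shell string avoids quoting problems with prompts that contain spaces or quotes.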

Interacting via API

Docker Model Runner provides OpenAI-compatible, Anthropic-compatible, and Ollama-compatible APIs. This means you can use existing SDKs and tools by pointing them at a local base URL, with no other code changes.

API Endpoints

| From | Base URL |
|---|---|
| Host machine (requires host-side TCP support) | http://localhost:12434 |
| Inside containers | http://model-runner.docker.internal |

For OpenAI SDK compatibility, append /engines/v1 to the base URL, for example http://localhost:12434/engines/v1.
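The chat-completions request shown in the cURL example that follows can also be sent with only Python's standard library, which is handy for scripts where you'd rather not install an SDK. A minimal sketch using the host base URL from the table; `build_chat_request`, `extract_reply`, and `chat` are hypothetical helper names.

```python
import json
import urllib.request


def build_chat_request(base_url, model, messages, temperature=0.7, max_tokens=500):
    """Build a POST to {base_url}/chat/completions with an OpenAI-style JSON body."""
    body = json.dumps({
        "model": model,
        "messages": messages,
        "temperature": temperature,
        "max_tokens": max_tokens,
    }).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(response_json):
    """Return the assistant text from the first choice of a chat completion."""
    return response_json["choices"][0]["message"]["content"]


def chat(base_url, model, messages, **kwargs):
    """Send the request and return the reply text."""
    req = build_chat_request(base_url, model, messages, **kwargs)
    with urllib.request.urlopen(req, timeout=120) as resp:
        return extract_reply(json.load(resp))
```

Usage: `chat("http://localhost:12434/engines/v1", "ai/smollm2", [{"role": "user", "content": "What is Docker?"}])`.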

Using cURL

```bash
curl http://localhost:12434/engines/v1/chat/completions \
  -H "Content-Type: application/json" \
  -d '{
    "model": "ai/smollm2",
    "messages": [
      {"role": "system", "content": "You are a helpful assistant."},
      {"role": "user", "content": "What is Docker?"}
    ],
    "temperature": 0.7,
    "max_tokens": 500
  }'
```

Using Python with OpenAI SDK

Since the API is OpenAI-compatible, you can use the official OpenAI Python library:

```python
from openai import OpenAI

client = OpenAI(
    base_url="http://localhost:12434/engines/v1",
    api_key="not-needed"  # No API key required for local models
)

response = client.chat.completions.create(
    model="ai/smollm2",
    messages=[
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Explain Docker containers in simple terms."}
    ],
    temperature=0.7,
    max_tokens=500
)

print(response.choices[0].message.content)
```

Streaming Responses

For real-time output, enable streaming:

```python
from openai import OpenAI

client = OpenAI(
    base_url="http://localhost:12434/engines/v1",
    api_key="not-needed"
)

stream = client.chat.completions.create(
    model="ai/smollm2",
    messages=[
        {"role": "user", "content": "Write a haiku about coding."}
    ],
    stream=True
)

# Print each piece of text as it arrives; some chunks carry no content (or no choices)
for chunk in stream:
    if chunk.choices and chunk.choices[0].delta.content:
        print(chunk.choices[0].delta.content, end="", flush=True)
print()  # Finish with a newline
```

Building a Simple Chatbot

Let's build a complete example: a terminal chatbot that keeps the conversation history across turns.

```python
from openai import OpenAI


def create_chatbot():
    client = OpenAI(
        base_url="http://localhost:12434/engines/v1",
        api_key="not-needed"
    )

    # The full history is sent on every request so the model sees earlier turns
    messages = [
        {"role": "system", "content": "You are a helpful AI assistant. Be concise and friendly."}
    ]

    print("Local LLM Chatbot (type 'quit' to exit)")
    print("-" * 40)

    while True:
        user_input = input("\nYou: ").strip()

        if user_input.lower() in ['quit', 'exit', 'q']:
            print("Goodbye!")
            break

        if not user_input:
            continue

        messages.append({"role": "user", "content": user_input})

        try:
            response = client.chat.completions.create(
                model="ai/smollm2",
                messages=messages,
                temperature=0.7,
                max_tokens=1000
            )

            assistant_message = response.choices[0].message.content
            messages.append({"role": "assistant", "content": assistant_message})

            print(f"\nAssistant: {assistant_message}")

        except Exception as e:
            print(f"\nError: {e}")
            messages.pop()  # Remove failed user message


if __name__ == "__main__":
    create_chatbot()
```

Save this as chatbot.py and run it:

```bash
pip install openai
python chatbot.py
```

Using from Docker Containers

If your application runs in a Docker container, use the internal hostname:

```python
from openai import OpenAI

client = OpenAI(
    base_url="http://model-runner.docker.internal/engines/v1",
    api_key="not-needed"
)
```

This lets containerized applications reach the local LLM without host-side TCP support or any ports published to the host.
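If the same code runs both on your host and in a container, you can choose the base URL at runtime instead of hardcoding it. A minimal sketch: it treats the presence of `/.dockerenv` (a file Docker creates inside containers it starts) as a heuristic for running in a container, and honors a `MODEL_RUNNER_URL` environment variable as an override. That variable name and `model_runner_base_url` are hypothetical, not something Docker sets.

```python
import os

HOST_BASE_URL = "http://localhost:12434/engines/v1"
CONTAINER_BASE_URL = "http://model-runner.docker.internal/engines/v1"


def running_in_container():
    """Heuristic: Docker creates /.dockerenv inside the containers it starts."""
    return os.path.exists("/.dockerenv")


def model_runner_base_url(in_container=None):
    """Pick the Model Runner base URL, honoring a hypothetical MODEL_RUNNER_URL override."""
    override = os.environ.get("MODEL_RUNNER_URL")
    if override:
        return override.rstrip("/")
    if in_container is None:
        in_container = running_in_container()
    return CONTAINER_BASE_URL if in_container else HOST_BASE_URL
```

You can then pass `base_url=model_runner_base_url()` to `OpenAI(...)` in the examples above. The explicit override is there because file-based container detection is only a heuristic.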

Troubleshooting

Model won't start

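```bash
# Check whether Docker Model Runner is running
docker model status

# Check which models are available locally
docker model list

# Fetch the Model Runner logs to look for errors
docker model logs
```

Slow responses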

- Try a smaller model or a more aggressive quantization (Q4 instead of Q8)
- Ensure no other heavy processes are running
- Check whether GPU acceleration is being used

Out of memory

- Use a smaller model
- Increase Docker's memory allocation in Docker Desktop settings
- Try a more aggressive quantization level
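To narrow down which of the problems above you're hitting, a quick scripted check can help. A minimal sketch that asks the OpenAI-compatible `GET /engines/v1/models` endpoint whether the API is reachable and whether a given model is listed. `diagnose` and `model_present` are hypothetical helpers, and matching a name like `ai/smollm2` against an ID such as `ai/smollm2:latest` is an assumption about how IDs are reported.

```python
import json
import urllib.error
import urllib.request


def model_present(model, payload):
    """True if `model` appears in an OpenAI-style /models response.

    Also accepts IDs that add a tag, e.g. "ai/smollm2:latest" for "ai/smollm2".
    """
    ids = [entry.get("id", "") for entry in payload.get("data", [])]
    return any(i == model or i.startswith(model + ":") for i in ids)


def diagnose(model, base_url="http://localhost:12434/engines/v1", timeout_s=5.0):
    """Return a one-line hint about why requests for `model` might be failing."""
    # Bypass HTTP(S)_PROXY settings: the API runs on the local machine.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        with opener.open(f"{base_url}/models", timeout=timeout_s) as resp:
            payload = json.load(resp)
    except urllib.error.HTTPError as err:
        # HTTPError must be caught before the broader OSError below.
        return f"API reachable but returned HTTP {err.code}; check the Model Runner logs"
    except OSError:
        return ("API unreachable: check that Docker Model Runner and host-side "
                "TCP support are enabled and that the port is correct")
    except ValueError:
        return "API returned a response that is not valid JSON"
    if not model_present(model, payload):
        return f"{model} is not available locally; try: docker model pull {model}"
    return "API reachable and model listed; check memory and CPU/GPU usage next"
```

Usage: `print(diagnose("ai/smollm2"))`. The checks run from the outside in: connectivity first, then model availability, leaving resource problems as the remaining suspect.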

Conclusion

Docker Model Runner makes local LLM development accessible to everyone. With just a few commands, you can pull and run various open-source models, integrate with applications using familiar OpenAI-compatible APIs, and develop and test without cloud dependencies or costs.

Start with a small model like ai/smollm2 or ai/llama3.2:1B-Q8_0 to get familiar with the workflow, then scale up to larger models as your needs grow.

The combination of Docker's simplicity and local LLM capabilities opens up possibilities for privacy-focused applications, offline tools, and cost-effective AI development. The learning curve is minimal if you already know Docker.

The only question is what you will build next!